Pathology Foundation Models (Pathology FMs) are a powerful tool for generating informative image embeddings from histology images (whole slide images or WSI). These embeddings can be used for various downstream tasks, including classification, segmentation, and object detection.

Since WSIs are very large and can easily exceed $\sim\frac{10\text{ mm}}{0.5\text{ µm}/\text{px}} = \small{20{,}000\text{ px}}$ per dimension, they are typically divided into smaller patches (called tiles). The tiles are passed through the FM to generate tile embeddings, which can then be aggregated depending on the target application.

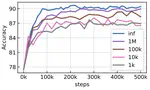

In our first work, Towards large-scale training of pathology foundation models, we developed a scalable framework for training pathology FMs with streaming tiles cropped from original WSIs. We also investigated how the FM performance scales with the amount of training data.

In this project, we trained state-of-the-art level FMs using open-access data such as TCGA, GTEx, and CPTAC, as well as whole slide images from the Netherlands Cancer Institute:

- TCGA – 12k WSIs

- GTEx – 25k WSIs

- CPTAC – 7k WSIs

- NKI-80k – 80k WSIs

In our latest work, Training state-of-the-art pathology foundation models with orders of magnitude less data, we further improved the training workflow and showed that it is possible to train pathology FMs that match or exceed the performance of today’s best models with even up to ${\sim}100\times$ less data.

Key findings

In short, we have shown that:

- All recent pathology FMs are close, and even 12k TCGA WSIs are enough to outperform most of them: Even our model trained just on TCGA (12k WSIs) is on par with Virchow2, one of the strongest publicly available pathology FMs, despite using $256{\times}$ less data;

- We also explored various post-training techniques and demonstrated the potential of fine-tuning on high-resolution images for further boosting the FMs;

- Finally, our best FM trained on 92k WSIs (TCGA + NKI-80k) and fine-tuned on high-resolution tiles demonstrated an average top-1 performance on a wide range of downstream tasks.

This suggests that scale isn’t everything – even relatively small datasets can yield impressive outcomes.

Moreover, another view on these results would be to interpret it as if the current self-supervised learning methods (e.g., DINOv2) may not fully capitalize on extremely large datasets, extracting mostly the information already present in relatively small datasets.

Read more: https://arxiv.org/abs/2504.05186